Research Groups

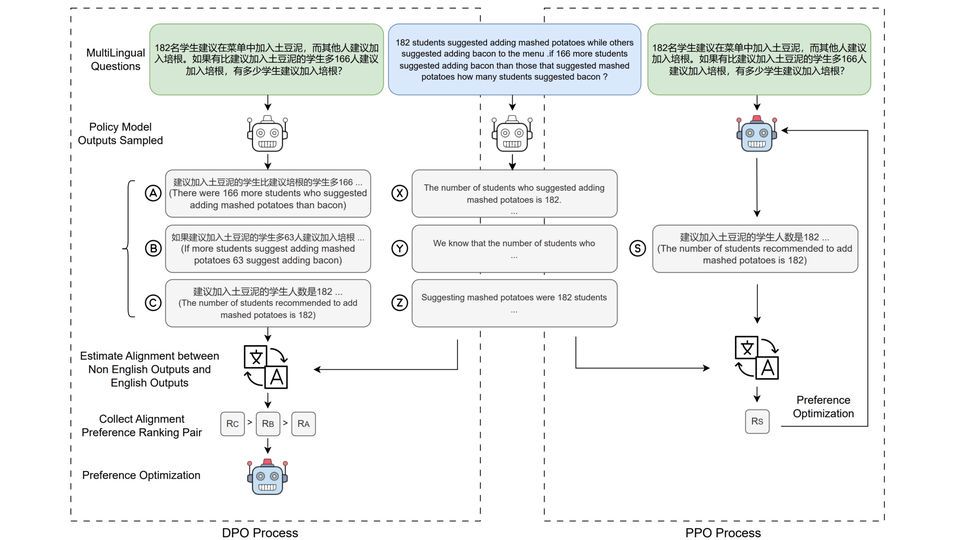

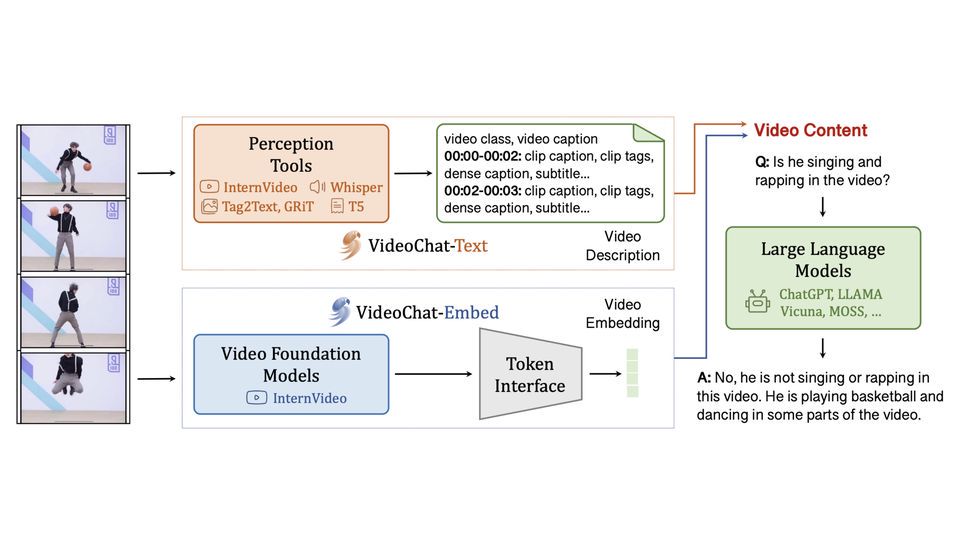

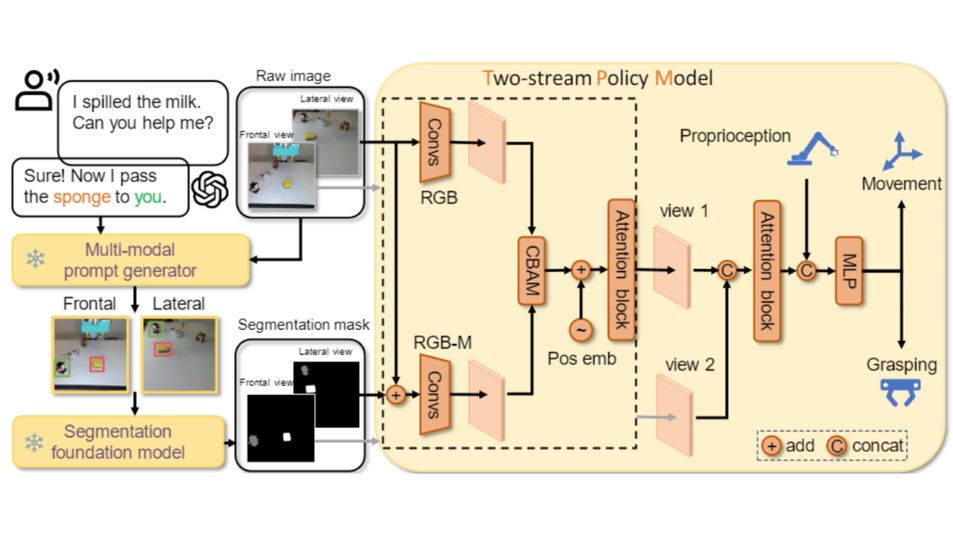

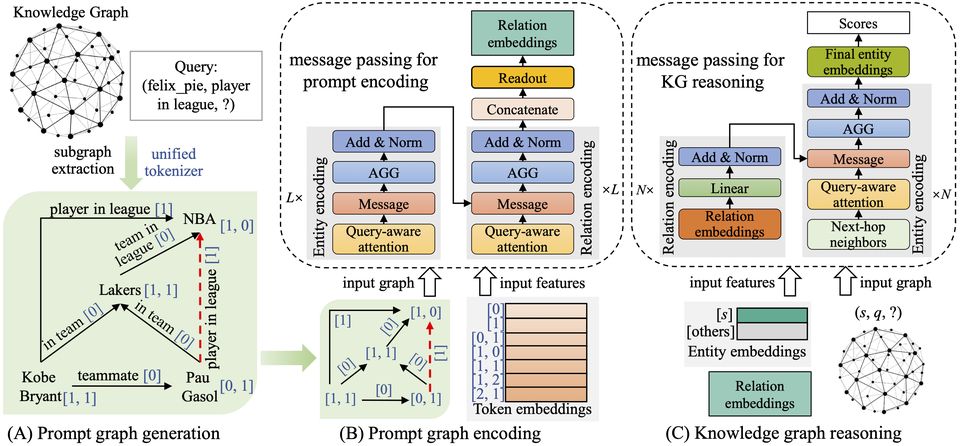

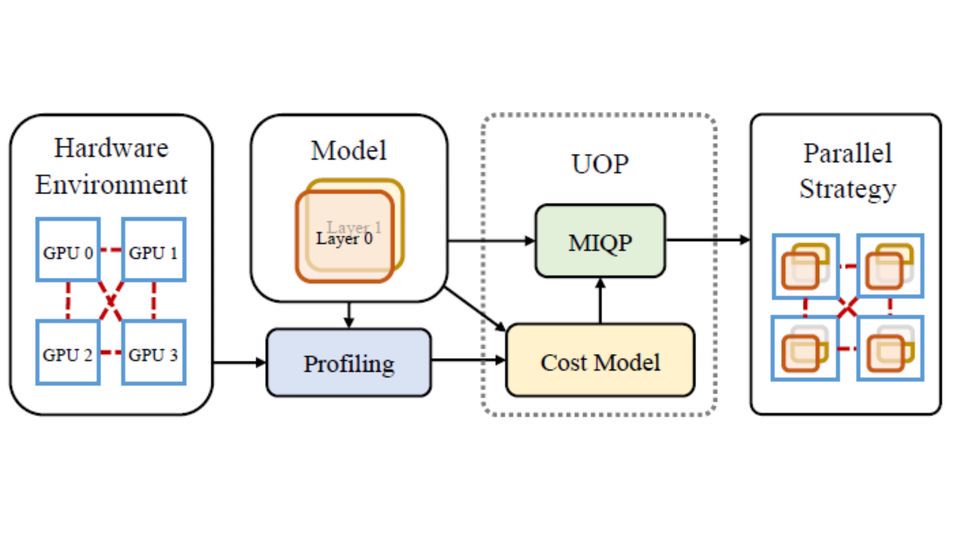

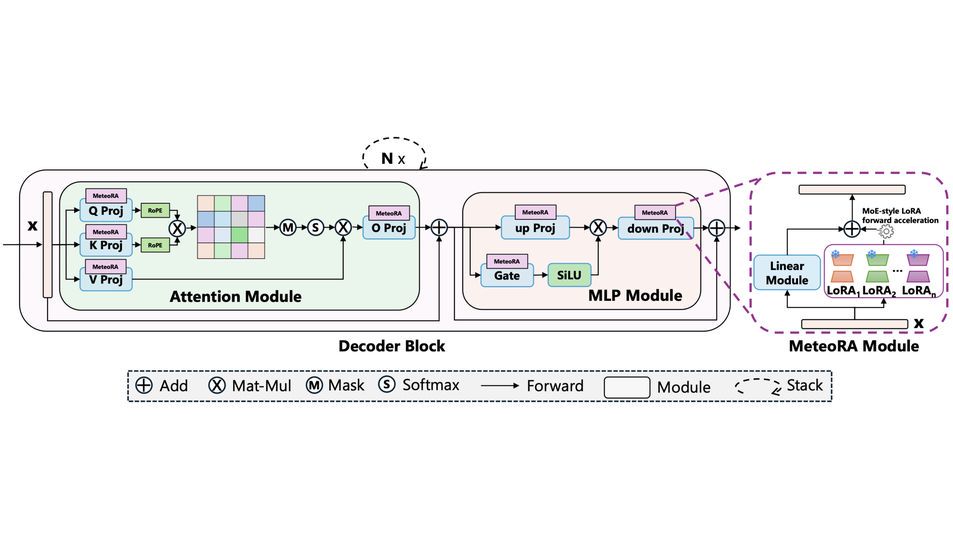

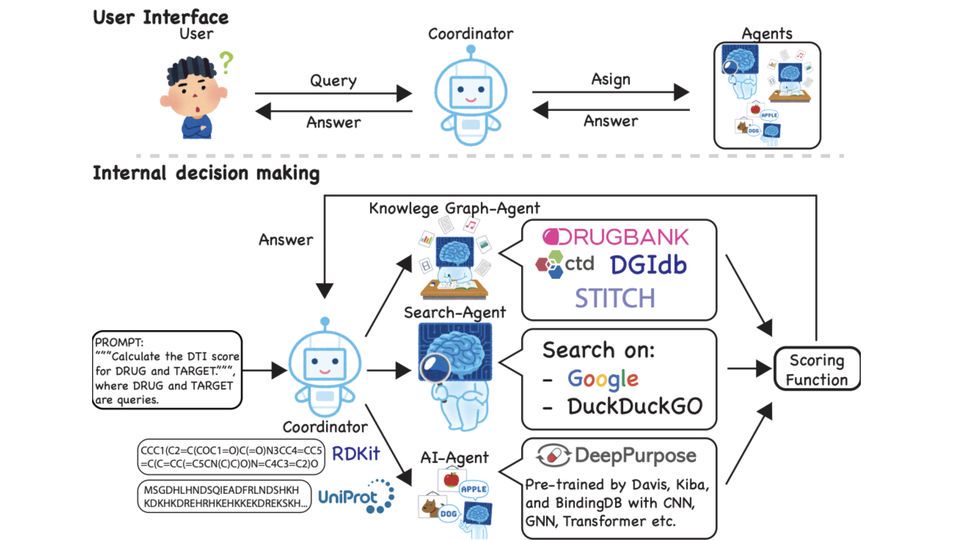

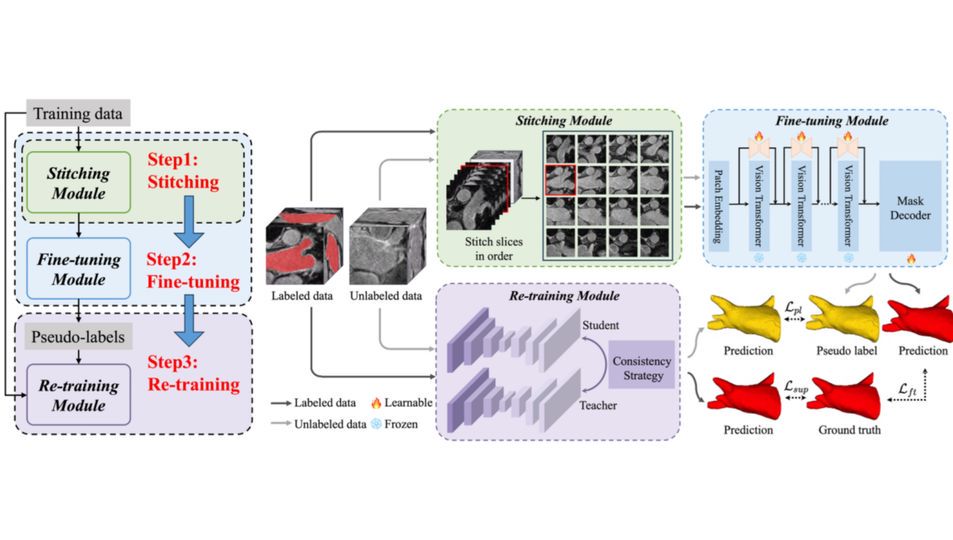

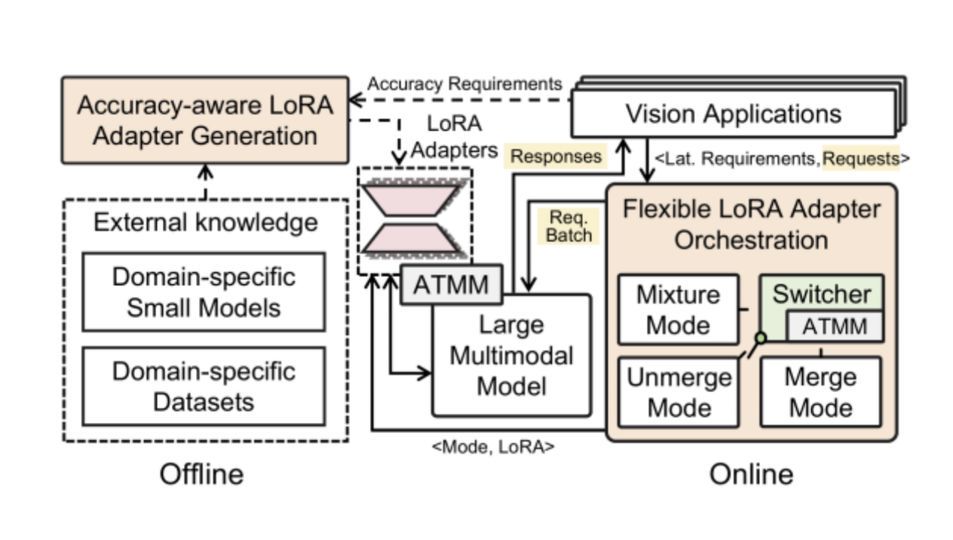

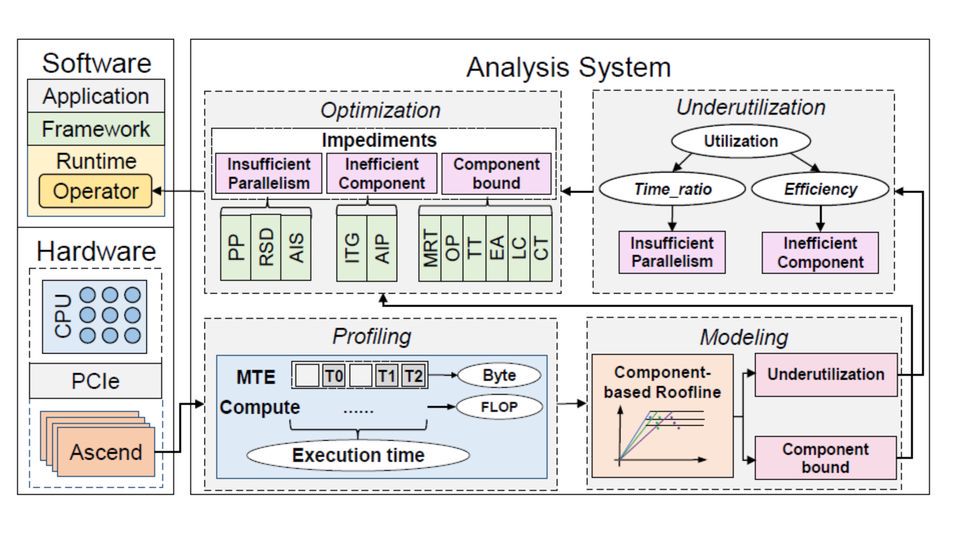

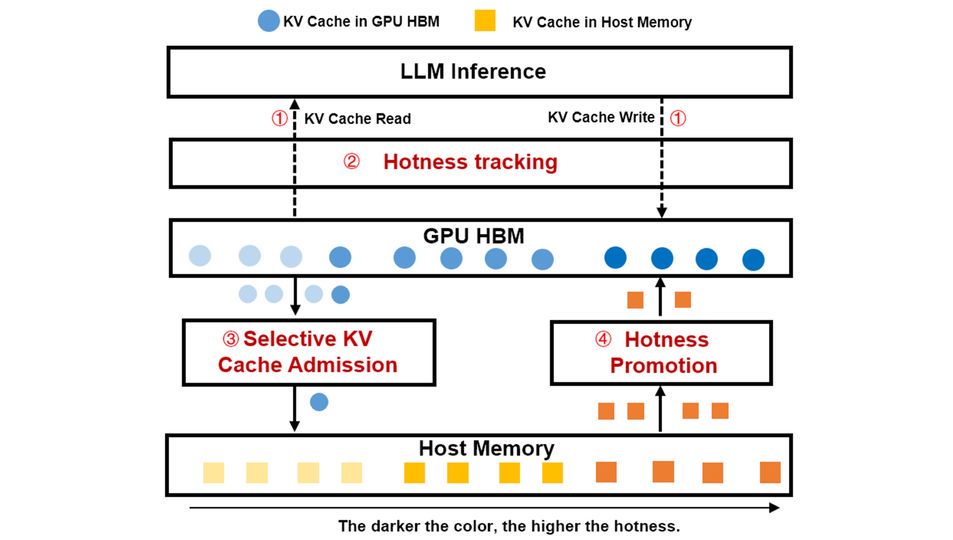

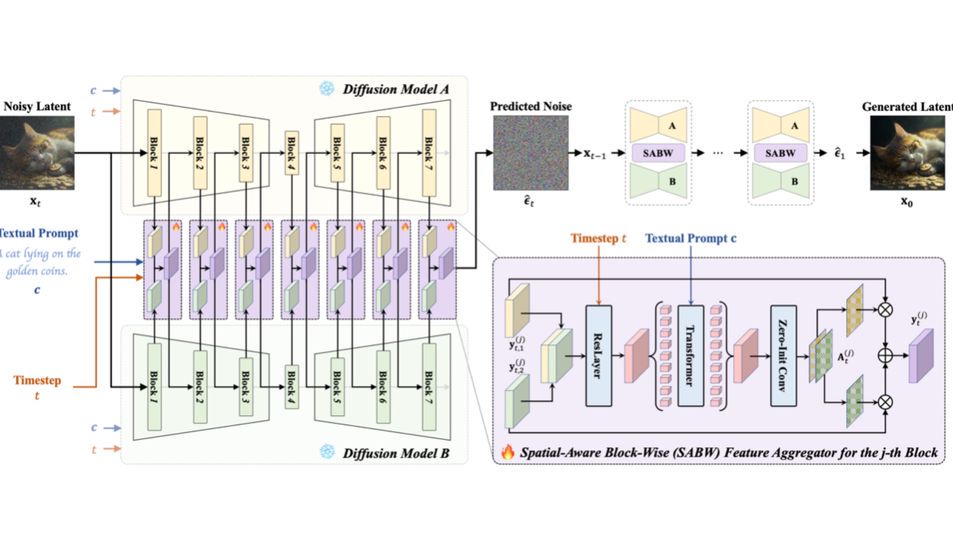

The Large Model Center conducts innovative research on large model system architectures, learning algorithms, and domain applications, providing core technologies for the next generation of artificial intelligence. Its main research directions include scalable system architectures for large models, high-performance machine learning algorithms and platforms for large models, and knowledge-enhanced learning algorithms for large models, as well as large language models, multimodal large models, large models for science, large models for embodied decision-making, intelligent agent systems, and neuro-symbolic reasoning systems.